This article explains how we test Kuzzle and why, although we aim for the highest code coverage possible, we do not plan to boast about 100% code coverage yet.

WHAT WE THINK ABOUT CODE COVERAGE

THE GOOD

Good code coverage is proof that the code is tested. It basically says: “you can use this project: we made sure that it works as intended for basic uses”.

Of course, bugs can still be found, and new use cases can arise that reveal weaknesses in a project’s architecture. Still, you know that a well-tested project should behave correctly most of the time, and that the people behind it dedicate their resources to guaranteeing that.

THE BAD

Some kinds of code statements are very time-consuming to test, for little quality gain: for instance, simple getters/setters, simple library wrappers, or error handlers added as safeguards around calls that do not return any error… yet.
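To make these categories concrete, here is a small hypothetical JavaScript class containing one of each: a getter/setter pair, a thin wrapper around Node’s `fs.existsSync`, and a `try`/`catch` safeguard around a call that cannot fail with the values the class currently stores. The `ConfigHolder` name and its members are invented for illustration and are not taken from Kuzzle.

```javascript
const fs = require('node:fs');

// Hypothetical class illustrating three kinds of code that are costly
// to test relative to what the tests would actually prove.
class ConfigHolder {
  constructor() {
    this._timeout = 1000;
  }

  // 1. Simple getter/setter: a test would only restate the assignment.
  get timeout() {
    return this._timeout;
  }

  set timeout(value) {
    this._timeout = value;
  }

  // 2. Simple library wrapper: it forwards to fs.existsSync, which Node itself tests.
  fileExists(path) {
    return fs.existsSync(path);
  }

  // 3. Safeguard catch: JSON.stringify does not throw for the plain numbers
  //    this class stores today, but the catch protects against future changes.
  serialize() {
    try {
      return JSON.stringify({ timeout: this._timeout });
    } catch (error) {
      return null;
    }
  }
}
```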

Several solutions exist to cover these cases. The most obvious one is to use special keywords, interpreted by your code coverage tool, to ignore parts of your code. While this is usually a good solution, in some cases it leads to many added keywords, impeding code readability.
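With Istanbul, these keywords are comment hints such as `/* istanbul ignore next */` and `/* istanbul ignore if */`. The sketch below applies them to three hypothetical helpers (`debugDump`, `normalizeIndex` and `resolveTimeout` are invented for the example); even in a few lines, the hints start to pile up around the actual logic.

```javascript
// Each hint tells Istanbul to leave the following code out of its statistics.

/* istanbul ignore next */
function debugDump(state) {
  // Developer-only helper, never called by the test suite
  console.log(JSON.stringify(state, null, 2));
}

function normalizeIndex(name) {
  /* istanbul ignore if */
  if (typeof name !== 'string') {
    // Safeguard: every current caller already passes a string
    throw new TypeError('index name must be a string');
  }
  return name.trim().toLowerCase();
}

function resolveTimeout(options) {
  // The `|| {}` fallback is a branch Istanbul would otherwise count as uncovered
  const opts = options || /* istanbul ignore next */ {};
  return opts.timeout || 1000;
}
```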

You may also remove some of this untested code, weakening your architecture, or force your code coverage tool to go through these statements by using heavy, repetitive and usually meaningless stubbing.

THE UGLY

Code coverage is just an indicator. Like any indicator, once you see it as a goal instead of a means of measurement, you can artificially boost it. High code coverage can easily be obtained by making tests execute many code statements without checking them against specifications or documentation.

Doing so practically reverses the purpose of code testing: instead of measuring how well your code is tested, the tests only make sure that a good coverage score is reached.

Moreover, code coverage shows only the result of unit tests. This kind of test is limited by nature, as it makes sure that individual parts of the code behave correctly, independently of each other. Unit tests must be complemented with functional tests, whose purpose is to ensure that these different parts interact correctly together.

That’s why practices like test-driven development (TDD) are increasingly popular: they make sure tests are written properly, not just to show a good indicator.

KUZZLE TEST POLICY

OUR RULES

There are a few rules we follow when testing Kuzzle and its associated sub-projects.

These rules are common sense:

- test the core methods as thoroughly as possible

- make sure that the code complies with specifications and documentation

- when finding a bug, add a unit or functional test making sure that this bug does not occur again

- use code coverage to detect code that is not sufficiently tested

- don’t ask the code coverage tool to ignore code

CURRENT CODE COVERAGE ANALYSIS

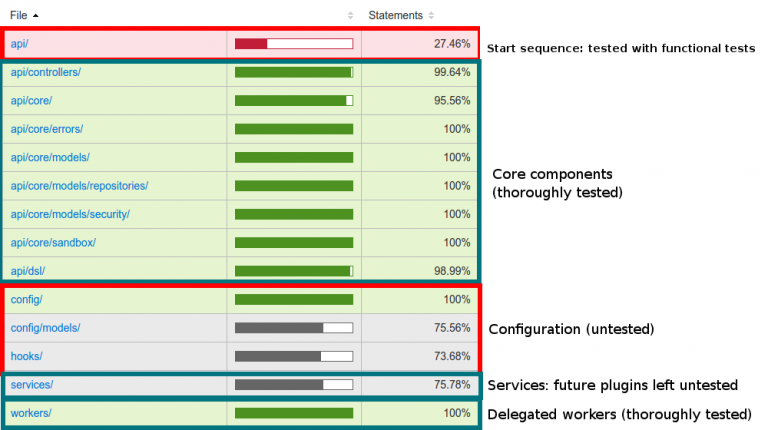

The table below is the detailed result of our current coverage on Kuzzle’s main project. It has been generated with our main code coverage tool, Istanbul:

As you can see, even though our badge shows a current coverage score of 89%, what remains uncovered is in fact unimportant:

- a startup sequence that is already tested using functional tests

- various configuration files with no real code in them

- services so rarely used that we have already planned to remove them from Kuzzle’s core

- pieces of code on which tests would be meaningless, like this one:

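```javascript
const childProcess = require('child_process');

// Runs a shell command synchronously and returns its standard output as a string
function sh(command) {
  return childProcess.execSync(command).toString();
}
```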
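In line with the rule of not asking the coverage tool to ignore code, one way to keep reasons like the ones listed above explicit is a separate “no test” register checked against the coverage report. This sketch assumes the JSON layout produced by Istanbul’s `json-summary` reporter (a `total` entry plus one entry per file, each with a `lines.pct` percentage). The register, file paths and percentages are made up for illustration and do not describe Kuzzle’s actual setup.

```javascript
// Hypothetical "no test" register: file path -> documented reason.
const noTestReasons = {
  'lib/example/startup.js': 'covered by functional tests',
  'lib/example/config.js': 'configuration only, no logic',
};

// Returns the files whose line coverage is below `threshold` (in percent)
// and that have no documented reason in `reasons`.
// `summary` is assumed to follow Istanbul's json-summary report shape.
function findUnjustifiedGaps(summary, reasons, threshold) {
  return Object.entries(summary)
    .filter(([file]) => file !== 'total')
    .filter(([, metrics]) => metrics.lines.pct < threshold)
    .filter(([file]) => !(file in reasons))
    .map(([file, metrics]) => ({ file, pct: metrics.lines.pct }));
}

// Made-up input, for illustration only.
const exampleSummary = {
  total: { lines: { pct: 70 } },
  'lib/example/startup.js': { lines: { pct: 10 } },
  'lib/example/config.js': { lines: { pct: 0 } },
  'lib/example/router.js': { lines: { pct: 64 } },
  'lib/example/controller.js': { lines: { pct: 100 } },
};

// Only the router is reported: it is below 80% and has no documented reason.
console.log(findUnjustifiedGaps(exampleSummary, noTestReasons, 80));
```

Keeping the reasons outside the source files leaves the code free of ignore hints while still making every untested part explicit.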

CONCLUSION

Tests are an important part of any project and code coverage is a very good indicator of how well a project has been tested.

However, there is one vital caveat: it is very easy to be lured into thinking that a project must reach a code coverage score of 100%. By doing so, we turn a simple indicator into a goal, diverting it from its purpose: measuring how well the code is tested.

A project must first be tested against acknowledged specifications and documentation. Then the code coverage result must be analyzed to spot insufficiently tested parts of the code, and the appropriate tests added. Once this is done, the global score should naturally be very high, and each part left untested must be identified with a valid “no test” reason.